Updated: 2025/8/8

Which one is larger?#

This article records various LLM tests on the following classic problem:

which one is larger? 9.9 or 9.11

Since this problem has appeared too frequently and might already be included in training datasets, I will test with:

which one is larger? 3.11 or 3.9

哪個數字比較大? 3.11跟3.9

PS. Of course, the wording might have slight variations each time (Chinese/English, punctuation…) as I might forget… but the essence remains the same.

- Updated 2025/8/9:

GPT-5taught me that the question was poorly designed. I’ll create another article to test:which one is larger as decimals? 3.11 or 3.9

Experimental Method#

Recorded data includes:

- Test time

- Test platform

- Model version

- Parameter settings

- Success/failure

Experimental Results#

| Success/failure | Reasoning | Model | Parameters | Platform |

|---|---|---|---|---|

| ✨ | ✓ | gpt-5 | Thinking | ChatGPT |

| 💥 | ✗ | gpt-5 | No Thinking | ChatGPT |

| ✨ | ✓ | gpt-oss-20b | Reasoning High | LMStudio |

| 💥 | ✓ | gpt-oss-20b | Reasoning Medium | LMStudio |

| 💥 | ✓ | gpt-oss-20b | Reasoning Low | LMStudio |

| 💥 | ✓ | deepseek-r1-0528-qwen3-8b | LMStudio | |

| ✨ | ✓ | qwen3-30b-a3b | LMStudio | |

| 💥 | ✗ | gemma-3-27b | LMStudio | |

| 💥 | ✗ | mistral-small-3.2 | LMStudio | |

| 💥 | ✗ | qwen3-coder-30b | LMStudio |

Experimental Records#

2025/8/8#

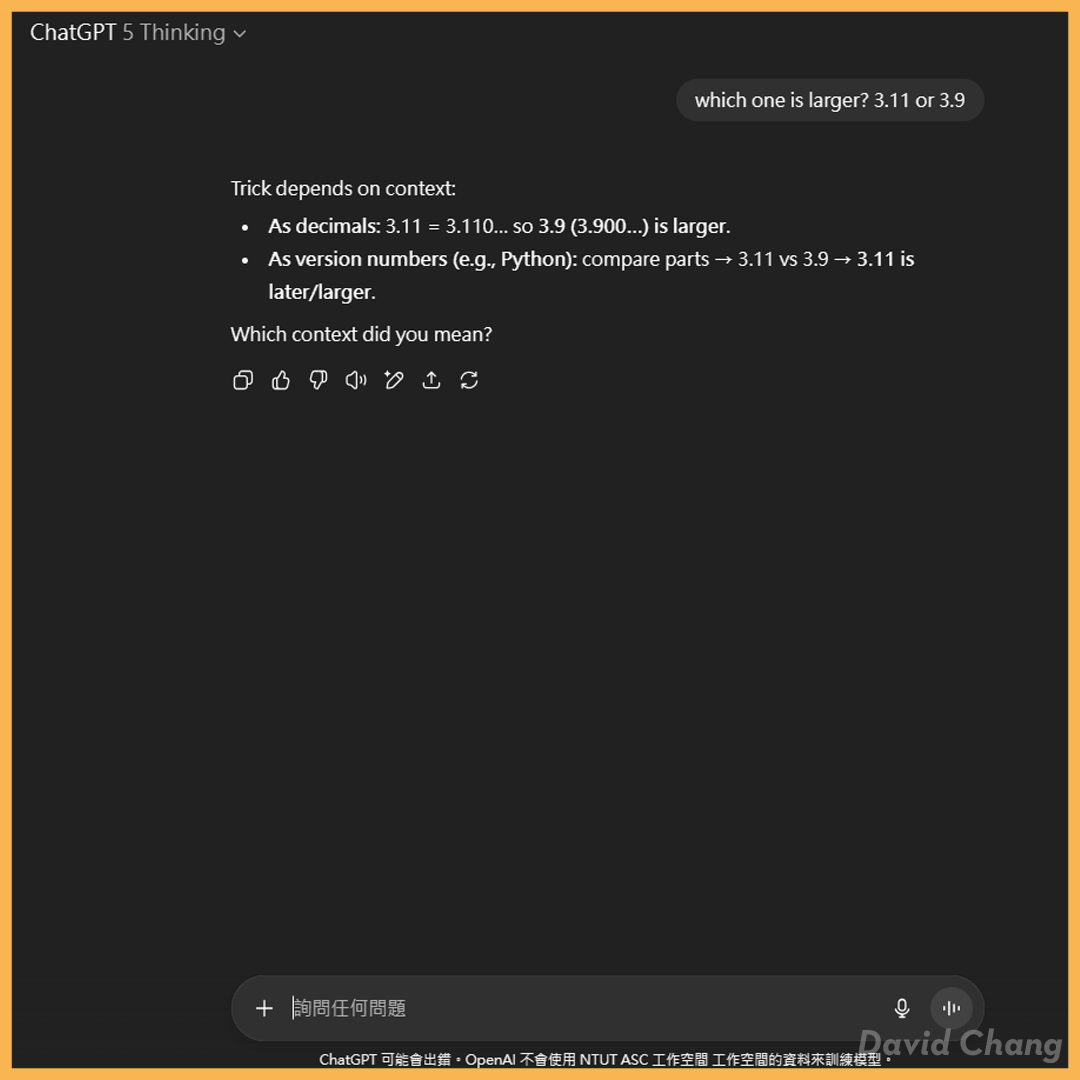

I was shocked and educated by GPT-5. Initially, I tested the version without Thinking and it failed, thinking it was nothing special. Later, the Thinking version gave the following response:

Trick depends on context:

As decimals: 3.11 = 3.110… so 3.9 (3.900…) is larger.

As version numbers (e.g., Python): compare parts → 3.11 vs 3.9 → 3.11 is later/larger.

Which context did you mean?

It turns out I was the fool - the question was poorly designed. How absurd!

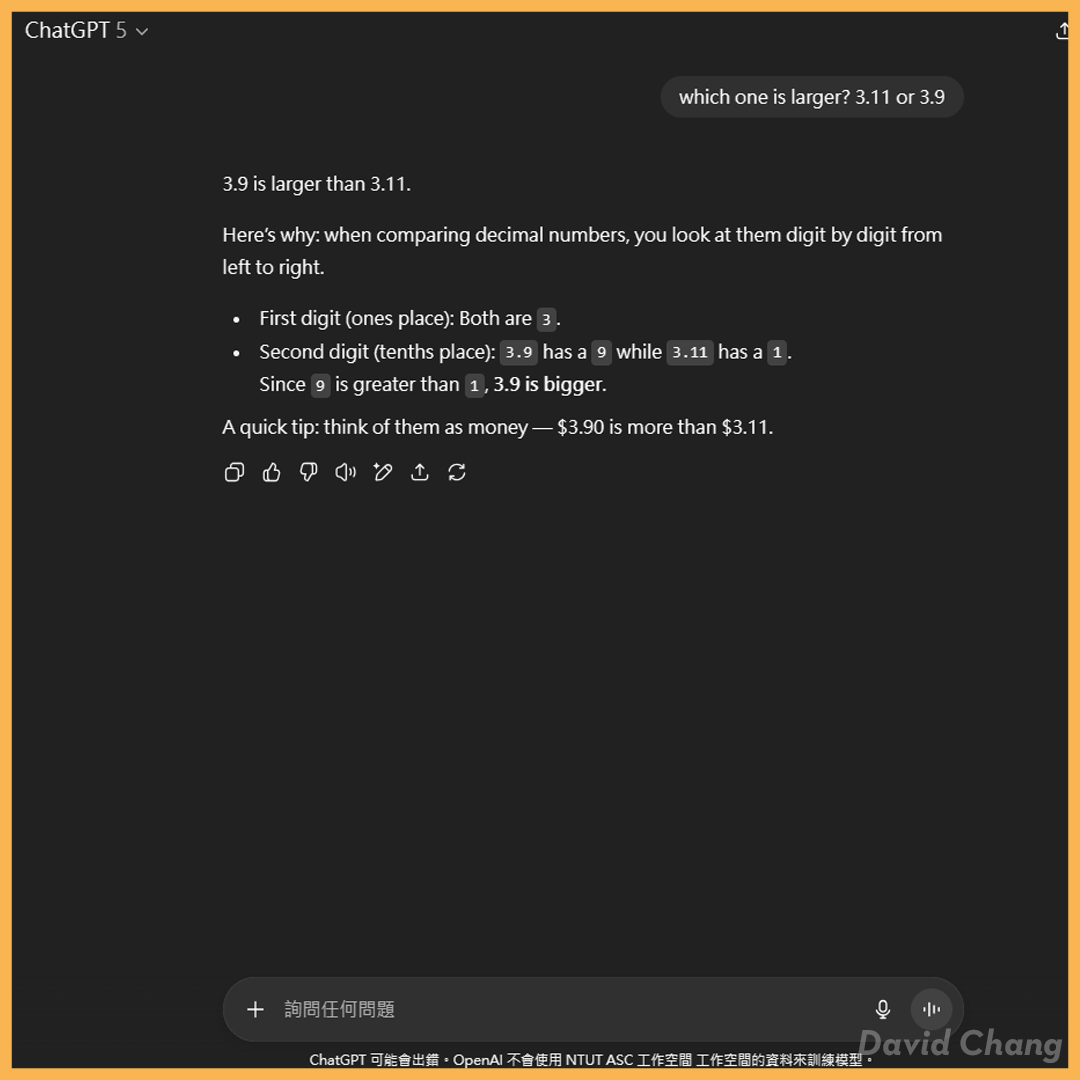

GPT-5#

- Test Platform: ChatGPT Official Website

- Model: openai/gpt-5 (presumably)

- Parameters: No Thinking

- Success: No

GPT-5 Thinking#

- Test Platform: ChatGPT Official Website

- Model: openai/gpt-5 (presumably)

- Parameters: Thinking Mode

- Success: Yes

2025/8/7#

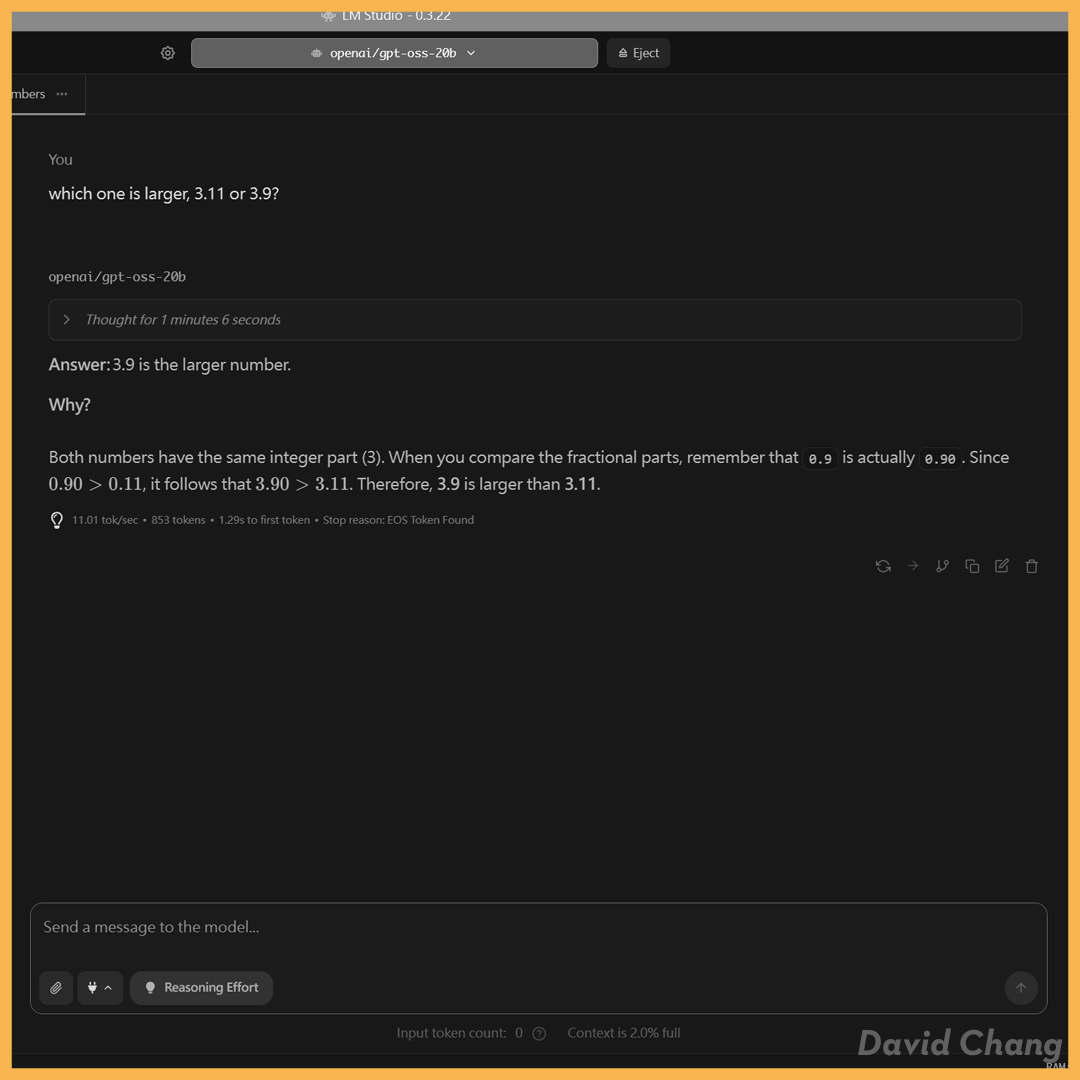

gpt-oss-20b-Reasoning-High#

- Test Platform: LMStudio

- Model: openai/gpt-oss-20b

- Parameters: Reasoning High

- Model Info: gguf MXFP4

- Success: Yes

- tok/sec: 11.01

- tokens: 853

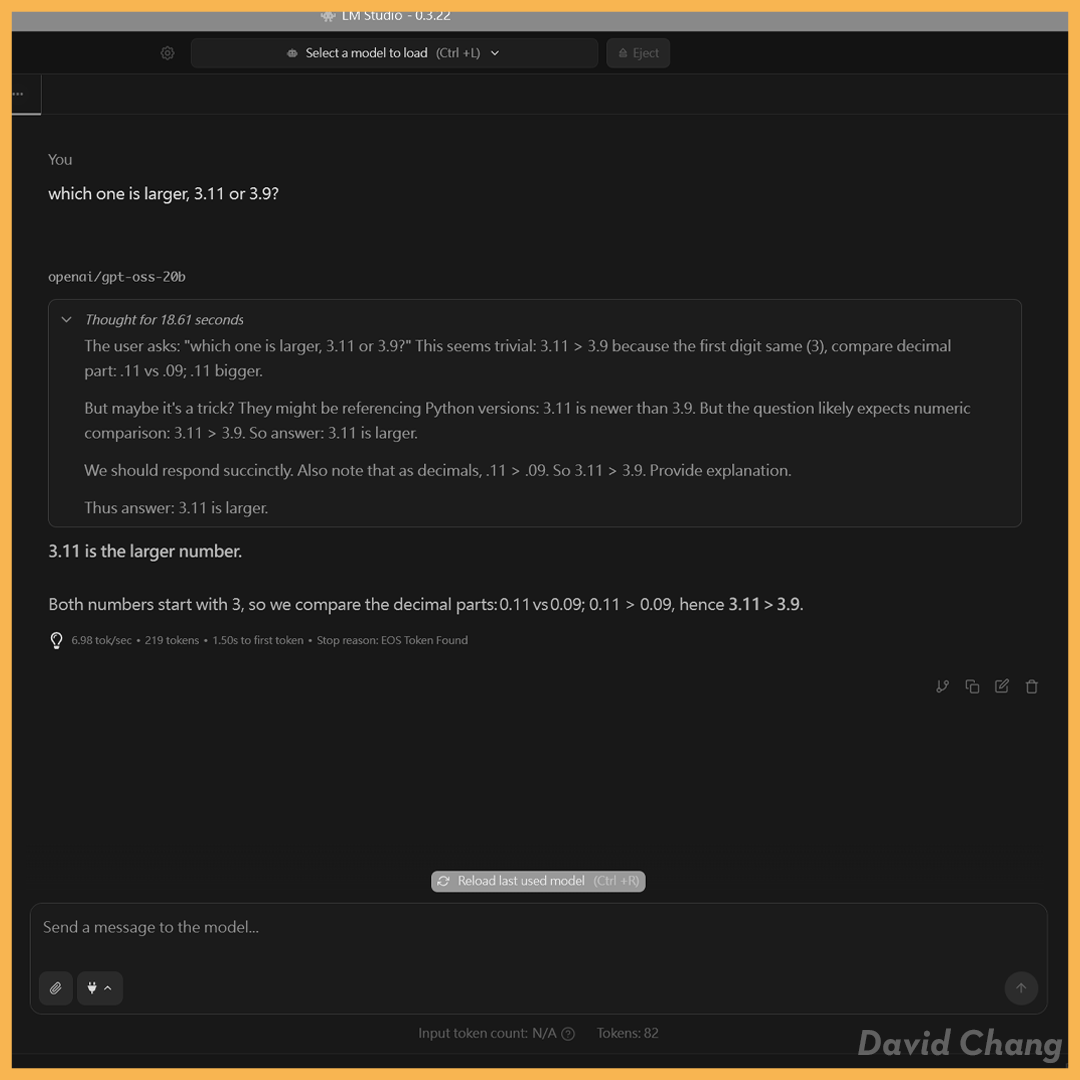

gpt-oss-20b-Reasoning-Medium#

- Test Platform: LMStudio

- Model: openai/gpt-oss-20b

- Parameters: Reasoning Medium

- Model Info: gguf MXFP4

- Success: No

- tok/sec: 6.98

- tokens: 219

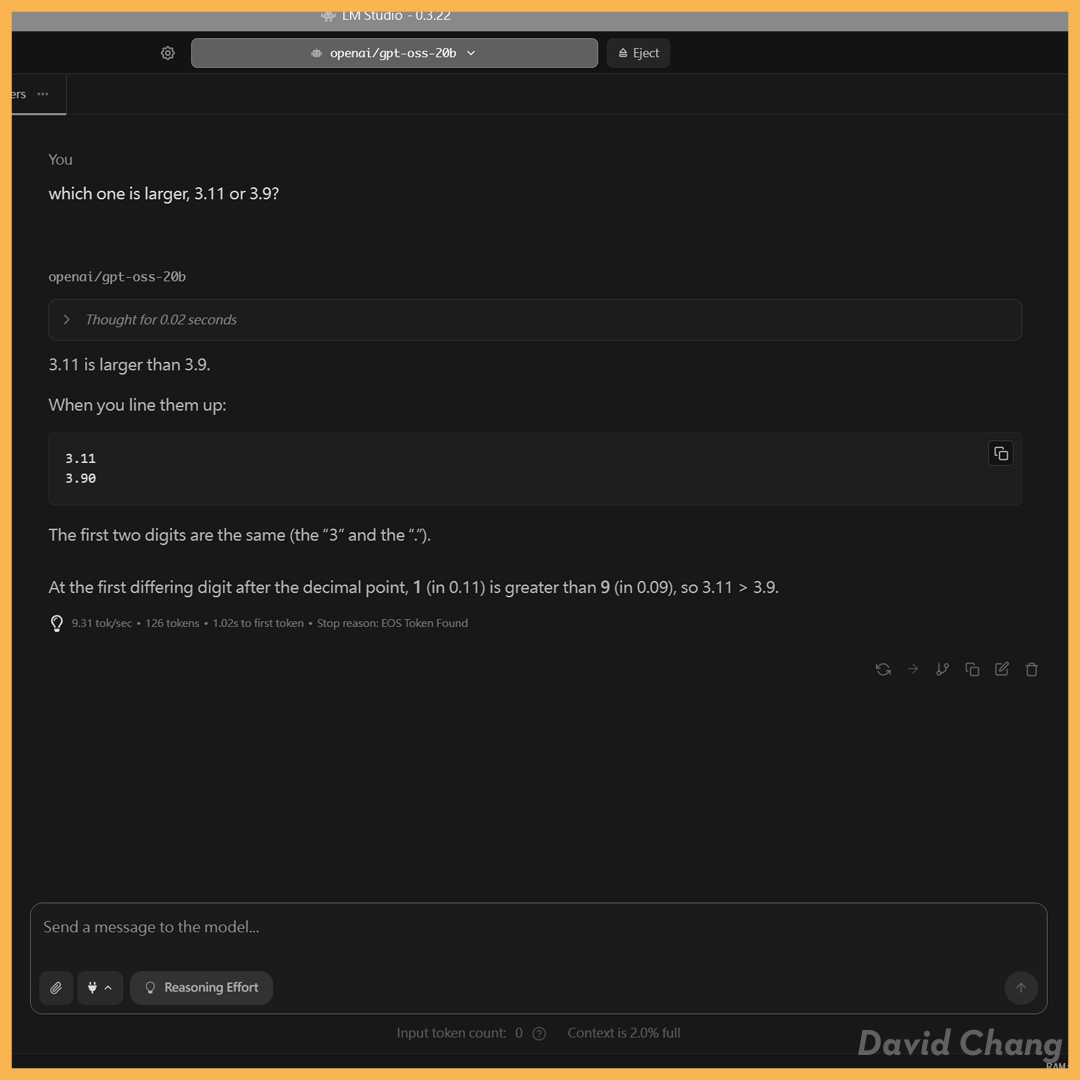

gpt-oss-20b-Reasoning-Low#

- Test Platform: LMStudio

- Model: openai/gpt-oss-20b

- Parameters: Reasoning Low

- Model Info: gguf MXFP4

- Success: No

- tok/sec: 9.31

- tokens: 126

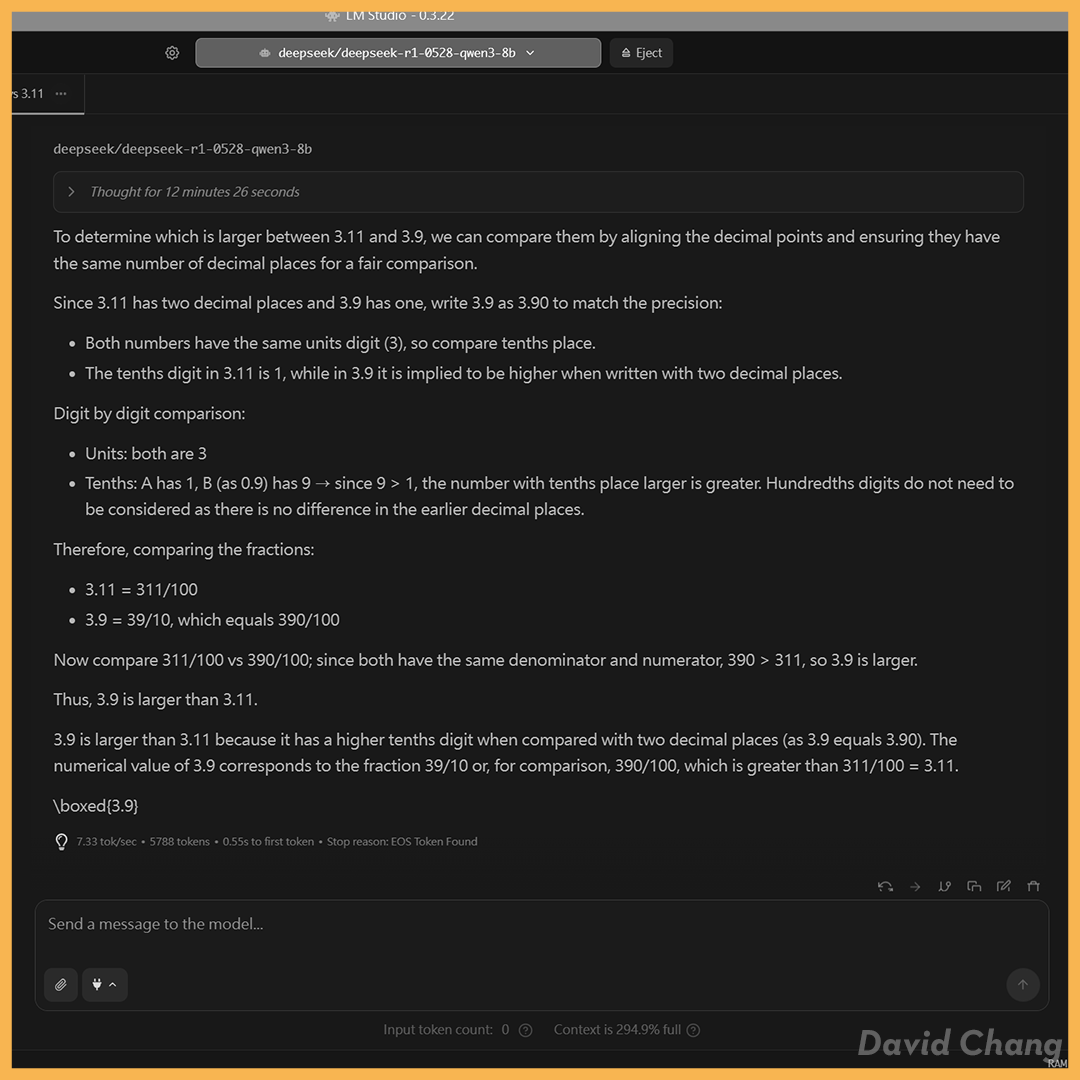

deepseek-r1-0528-qwen3-8b#

- Test Platform: LMStudio

- Model: deepseek/deepseek-r1-0528-qwen3-8b

- Parameters: Thinking

- Model Info: gguf Q4_K_M

- Success: No

- tok/sec: 7.33

- tokens: 5788 (over 12 minutes…)

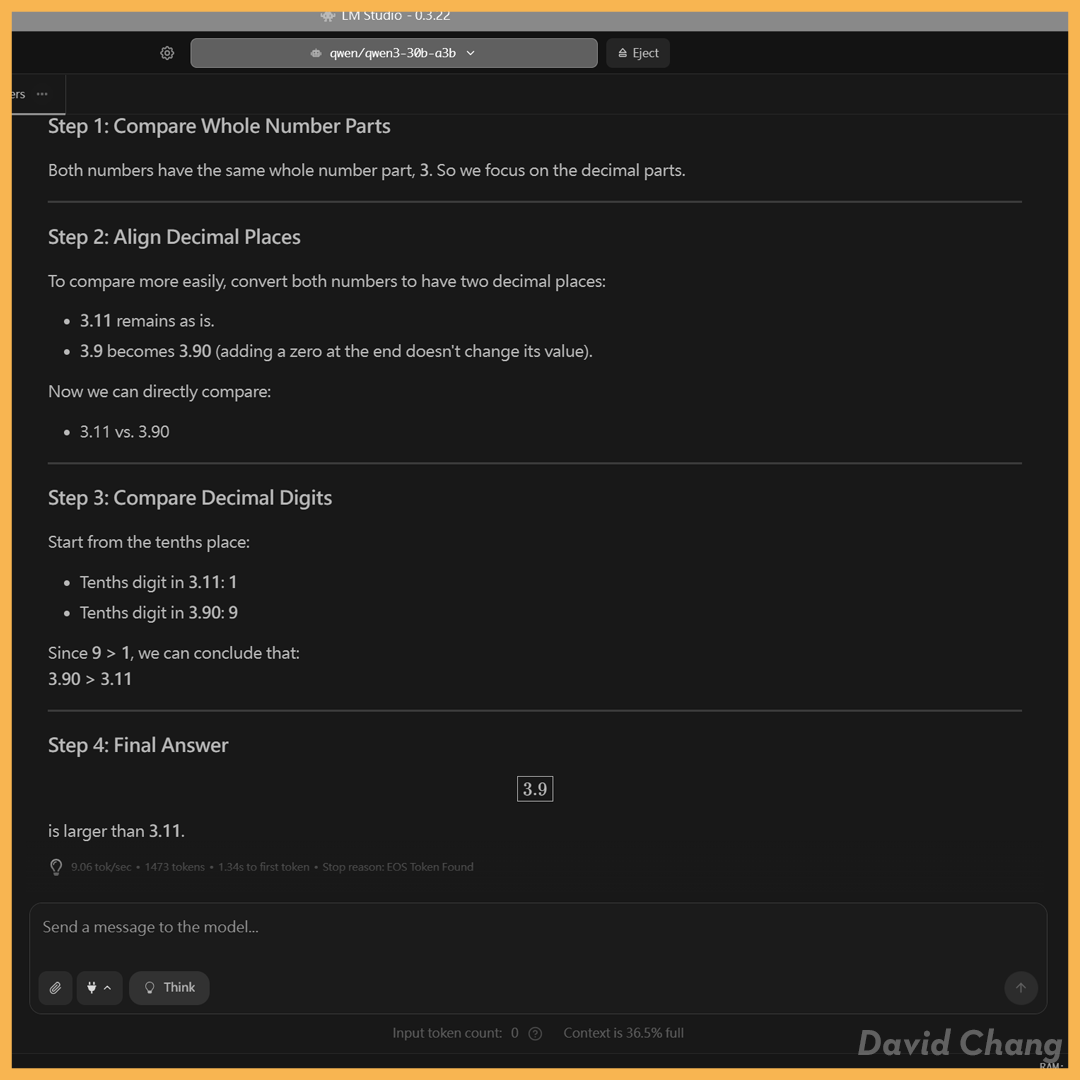

qwen3-30b-a3b#

- Test Platform: LMStudio

- Model: qwen/qwen3-30b-a3b

- Parameters: Thinking

- Model Info: gguf Q4_K_M

- Success: Yes

- tok/sec: 9.06

- tokens: 1473

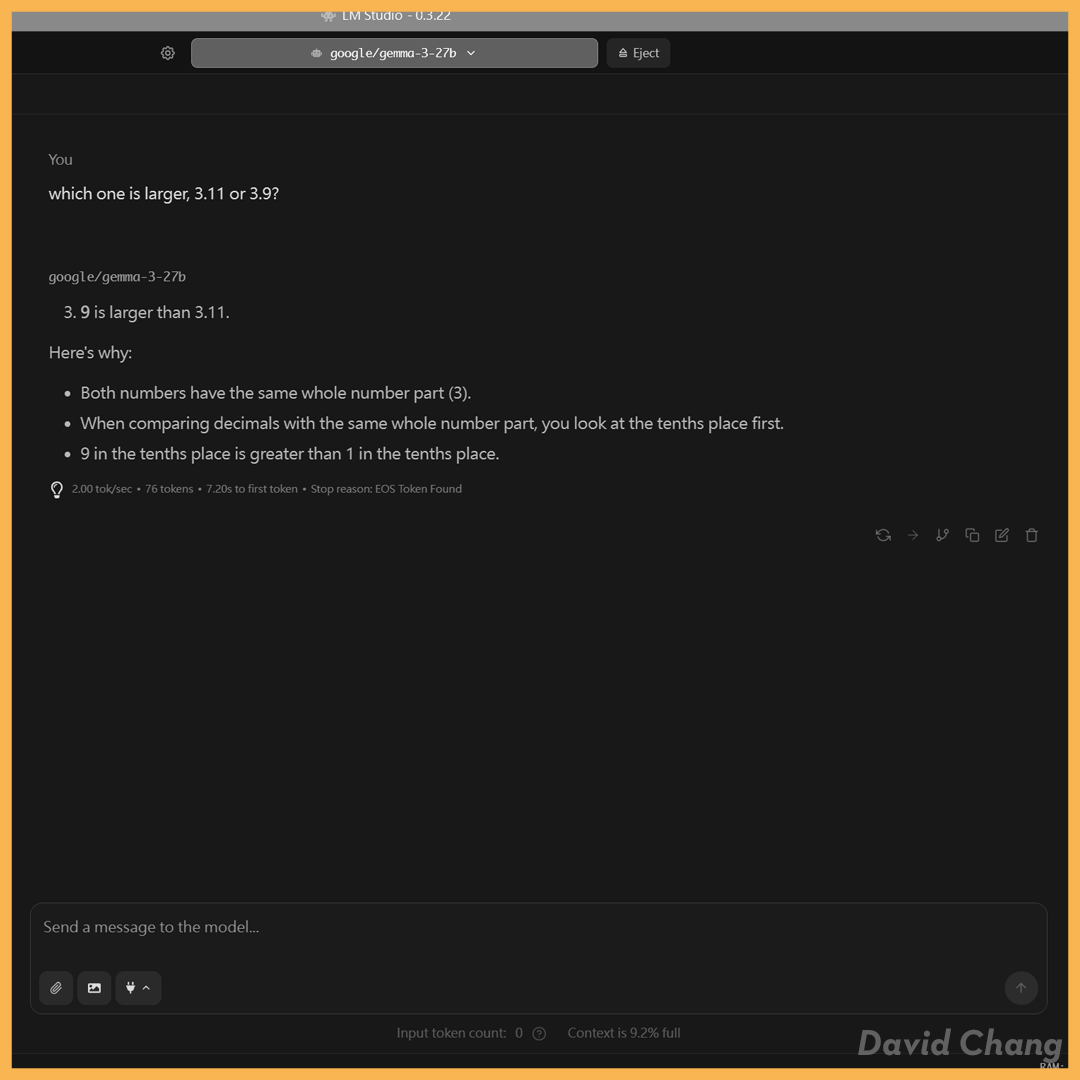

gemma-3-27b#

- Test Platform: LMStudio

- Model: google/gemma-3-27b

- Model Info: gguf Q4_0

- Success: No

- tok/sec: 2.00

- tokens: 76

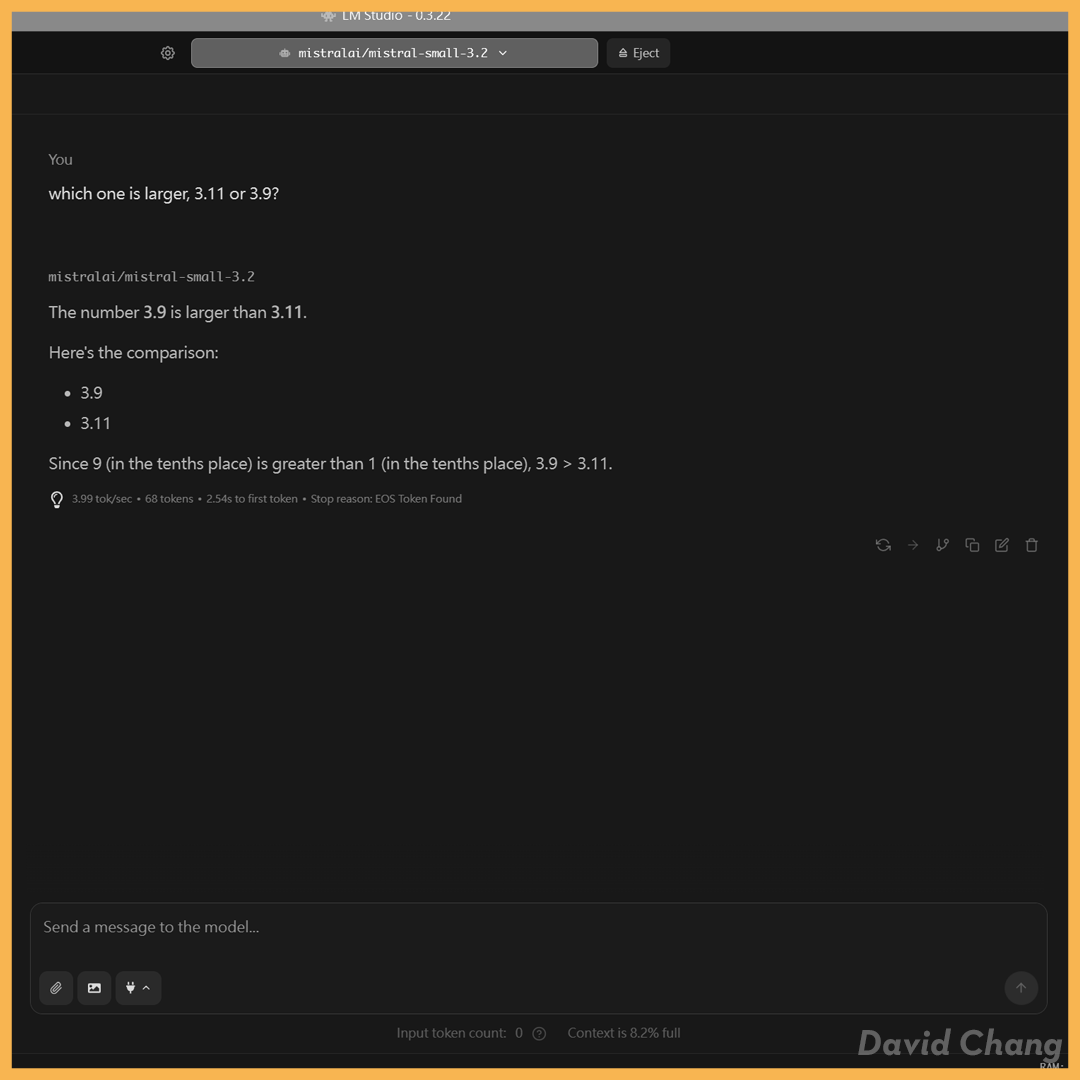

mistral-small-3.2#

- Test Platform: LMStudio

- Model: mistralai/mistral-small-3.2

- Model Info: gguf Q4_K_M

- Success: No

- tok/sec: 3.99

- tokens: 68

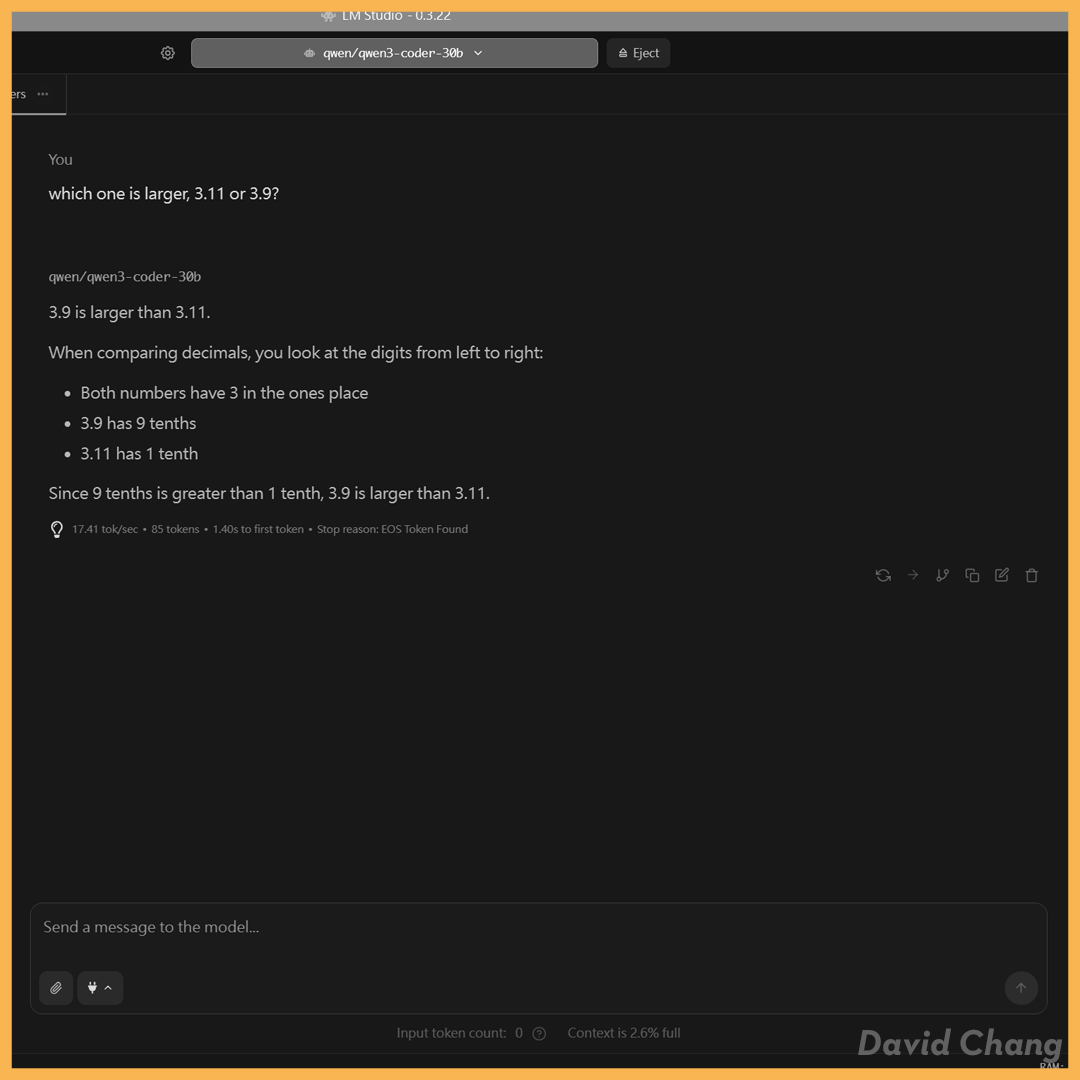

qwen3-coder-30b#

- Test Platform: LMStudio

- Model: qwen/qwen3-coder-30b

- Model Info: gguf Q4_K_M

- Success: No

- tok/sec: 17.41

- tokens: 85