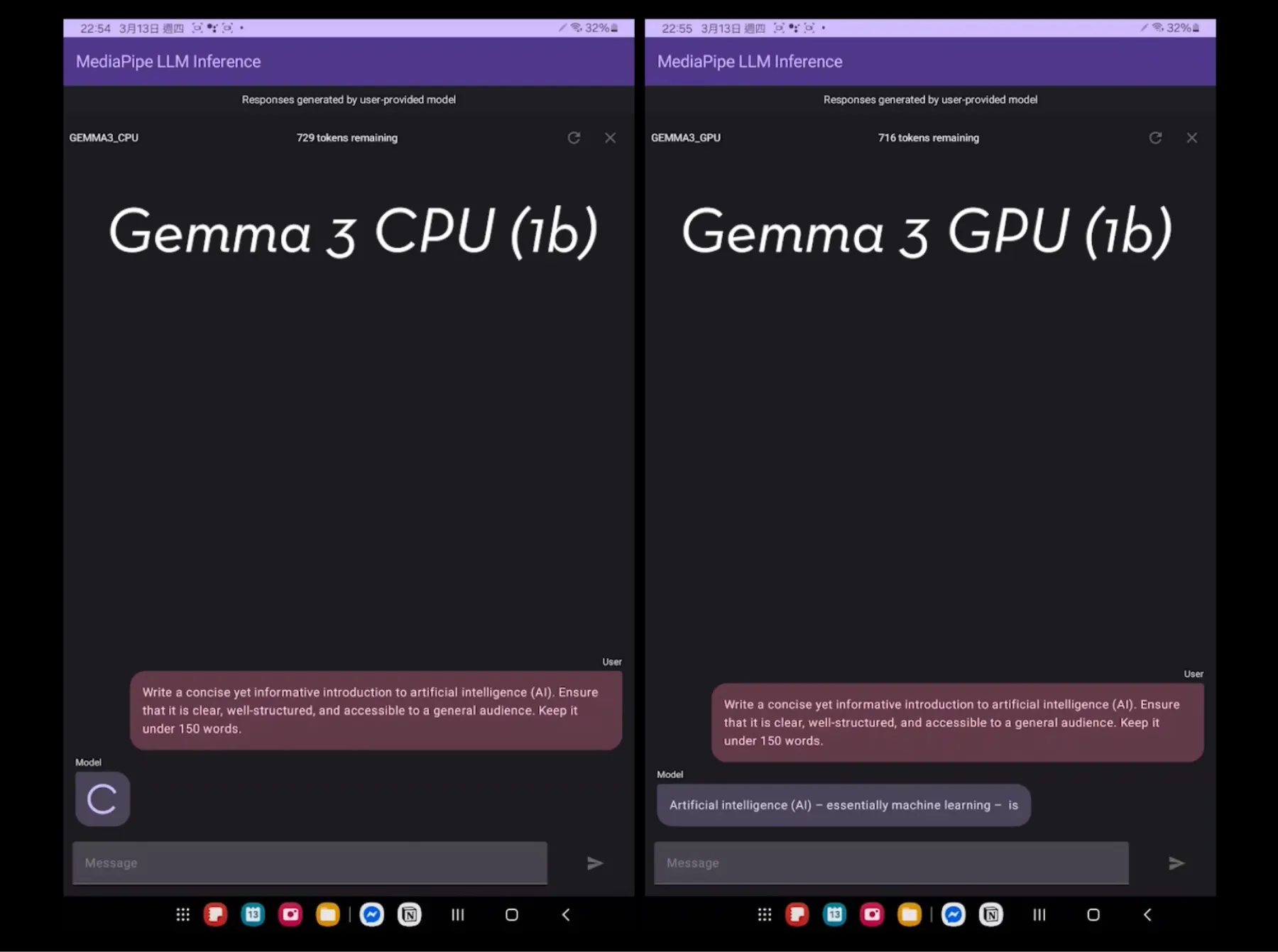

I recently did a quick, casual test of several AI models on my Samsung Tab S9 to see how practical running local AI inference directly on mobile devices could be — especially focusing on Gemma 3’s new performance enhancements. I followed Google’s official guide for setting up Gemma 3 with MediaPipe LLM inference.

Test Setup#

- Device: Samsung Tab S9 (8GB RAM, Snapdragon® 8 Gen 2)

- Environment: MediaPipe LLM Inference

- Models:

- Gemma 3 (1B) CPU & GPU

- PHI-4-mini-instruct (CPU)

- DeepSeek-R1-Distill-Qwen-1.5B (CPU)

Performance Results#

Tokens Per Second#

- Gemma 3 GPU: 52.4 tokens/sec

- Gemma 3 CPU: 42.8 tokens/sec

- DeepSeek-R1 CPU: 15.7 tokens/sec

- PHI-4-mini CPU: 4.5 tokens/sec

Time to Generate a 150-word Prompt#

- Gemma 3 GPU: 3.3 sec

- Gemma 3 CPU: 5.4 sec

- DeepSeek-R1 CPU: 34.3 sec

- PHI-4-mini CPU: 45.8 sec

Reasoning Test: “All but Three”#

To briefly evaluate logical reasoning, I asked the models:

Solve the following problem and explain your reasoning step by step: A farmer has 10 sheep. All but three run away. How many sheep does he have left?

The correct answer is 3, but surprisingly, every model answered incorrectly:

- Gemma 3 (CPU & GPU): answered 7

- PHI-4-mini and DeepSeek-R1: answered 7

Although these are small models, it’s still funny to see them miss the linguistic nuance in this simple question.

Final Thoughts#

Testing these local AI models on a mobile device highlights just how powerful Gemma 3 is, especially with GPU acceleration. Local inference on mobile is practical and effective, but this casual experiment also shows that smaller models still have clear limitations, particularly in nuanced logical reasoning.